Written by Frank Bouw as part of an internship project at Aveco de Bondt.

Mapping green spaces in cities is crucial for biodiversity, climate adaptation, and creating a healthy living environment. However, mapping greenery is easier said than done. So what role can AI play in accelerating and automating this process?

During my research internship at Aveco de Bondt, I explored how AI within the Tygron platform can help. The focus of the research was to develop an AI model that can automatically recognize hedges in aerial photographs.

My background is in environmental and climate sciences, with some experience in GIS. During my internship, I learned that it is possible to build AI models using Tygron even without an ICT background. Although this study focused on hedges, the same approach can be applied to many other themes.

- Internship research (PDF): https://www.tygron.com/wp-content/uploads/2025/10/Eindverslag_Onderzoeksstage_Frank_Bouw.pdf

- Final presentation (PDF): https://www.tygron.com/wp-content/uploads/2025/10/Eindpresentatie_Onderzoeksstage_Frank_Bouw.pdf

Why Hedges?

Hedges are more than just boundaries. They are vital habitats for species such as hedgehogs and house sparrows, and they contribute to the green appearance of urban areas. With better data on hedges, we can improve models that assess biodiversity or heat stress.

Aveco de Bondt, for example, uses a biodiversity stress test, a GIS tool that maps habitat suitability by combining various input layers. For a tool like this, better input data leads to more accurate results. However, hedges are often missing from existing datasets: trees are usually well registered, but lower vegetation is not.

An AI model can make a difference by automatically detecting such elements. Moreover, since AI models analyze aerial imagery, they can also identify greenery in private gardens, providing a more complete picture that includes both public and private green space.

Training Your Own AI Model with Tygron

Tygron recently introduced an AI feature that allows users to build their own object recognition models. For my research, I followed a five-step process:

- Creating Training Data

In QGIS, I selected five training areas in Ede and manually mapped over 1,900 hedges.
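The hedges drawn in QGIS are polygons, while instance segmentation models such as Mask R-CNN learn from per-pixel masks. In this project, Tygron handled the conversion of the drawn hedges into training data. The sketch below only illustrates the underlying idea, assuming a hedge polygon that is already expressed in the pixel coordinates of an image tile. The function names `point_in_polygon` and `rasterize` are hypothetical, and a real workflow would use a GIS library rather than this pure-Python ray-casting test.

```python
# Hypothetical sketch: rasterize one hand-drawn hedge polygon into a binary mask,
# the per-pixel label format that instance segmentation models learn from.
# Assumes the polygon vertices are already in pixel coordinates (x = column, y = row).

Polygon = list[tuple[float, float]]


def point_in_polygon(x: float, y: float, polygon: Polygon) -> bool:
    """Ray casting: count how often a horizontal ray from (x, y) to the right crosses an edge."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the ray's y-coordinate can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def rasterize(polygon: Polygon, width: int, height: int) -> list[list[int]]:
    """Return a height x width mask with 1 where the pixel centre lies inside the polygon."""
    return [
        [1 if point_in_polygon(col + 0.5, row + 0.5, polygon) else 0 for col in range(width)]
        for row in range(height)
    ]


if __name__ == "__main__":
    # A made-up, elongated hedge outline on a 6 x 4 pixel tile.
    hedge = [(0.0, 1.0), (6.0, 1.0), (6.0, 2.0), (0.0, 2.0)]
    for row in rasterize(hedge, width=6, height=4):
        print("".join("#" if v else "." for v in row))
```

Testing the pixel centre rather than the pixel corner keeps each pixel's label unambiguous when a polygon edge runs exactly along a pixel boundary.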

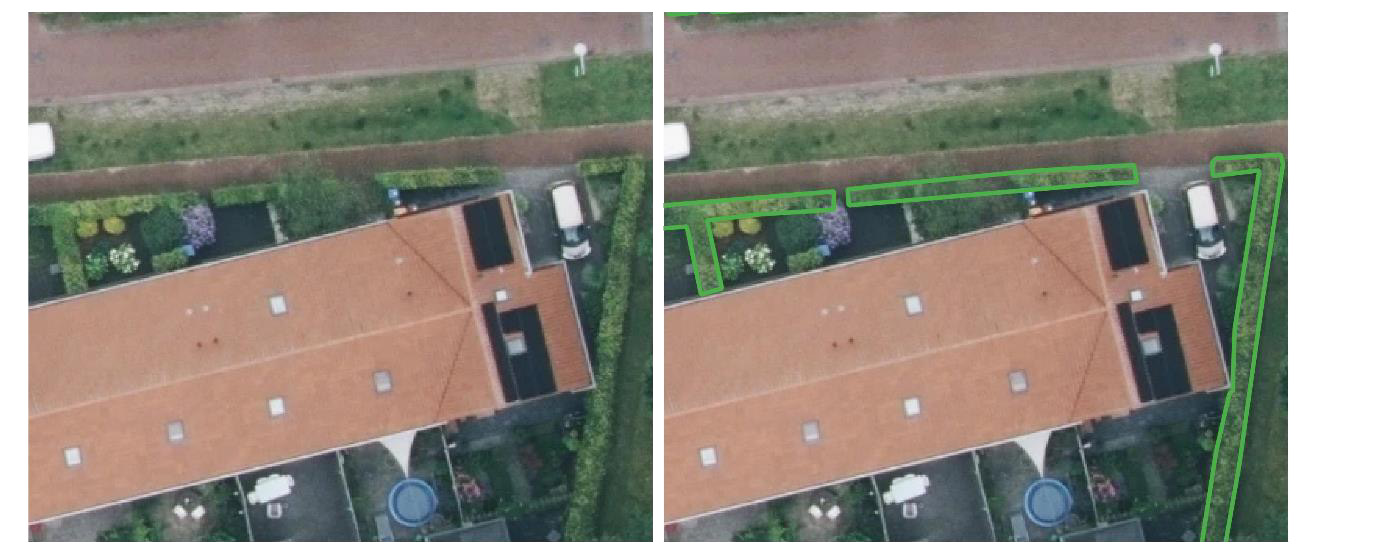

Visualization of drawing hedges. Left: the image before the hedges were drawn; right: the same image with the hedges drawn.

- Preparing the Data in Tygron

The aerial photos and drawn hedges were imported into Tygron and exported as training and test data for model training.

- Training the Model

Using Tygron’s open-source repository, I trained a Mask R-CNN model, a type of AI model that can not only recognize objects but also outline them. Various settings and numbers of training epochs (full passes over the training data) were tested to find the right balance between precision and generalization.

- Testing the Model

The trained model was applied to aerial imagery of Ede, and the results were compared to the manually mapped hedges to evaluate accuracy. Two metrics were used: precision measures what share of the detected hedges are correct, while recall measures what share of the actual hedges were detected.

- Validating the Model

Validation was conducted using fieldwork data from two neighborhoods: one with many trees and one with few. It turned out that many hedges were not visible in the aerial photos due to several limitations:

- Tilt effect: Buildings obscure nearby hedges due to the camera angle.

- Shadow effect: Sunlight causes dark areas that hide vegetation.

- Overhang effect: Trees or structures can cover hedges from view.

In the tree-dense neighborhood, about 50% of the hedges were not visible at all.
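The tilt and shadow effects can be put into rough numbers with basic geometry. The sketch below assumes flat ground and a single vertical obstacle of known height h: when the photo is taken at an angle θ from vertical, the ground behind the obstacle is hidden over a horizontal distance of about h·tan θ, and with the sun at elevation angle α the obstacle casts a shadow of length h / tan α. The function names and the heights and angles in the example are illustrative assumptions, not measurements from this study.

```python
import math


def tilt_occlusion(height_m: float, off_nadir_deg: float) -> float:
    """Horizontal ground distance (m) hidden behind a vertical obstacle of the given
    height when the camera sees it at off_nadir_deg from vertical. Assumes flat ground."""
    return height_m * math.tan(math.radians(off_nadir_deg))


def shadow_length(height_m: float, sun_elevation_deg: float) -> float:
    """Length (m) of the shadow a vertical obstacle casts on flat ground
    when the sun stands sun_elevation_deg above the horizon."""
    if not 0 < sun_elevation_deg <= 90:
        raise ValueError("sun elevation must be in (0, 90] degrees")
    return height_m / math.tan(math.radians(sun_elevation_deg))


if __name__ == "__main__":
    # Illustrative values: a 9 m high house, seen 20 degrees off nadir, sun at 30 degrees.
    print(f"hidden by tilt: {tilt_occlusion(9, 20):.1f} m")  # about 3.3 m
    print(f"shadow length:  {shadow_length(9, 30):.1f} m")   # about 15.6 m
```

Even with these modest values, a hedge directly behind or beside a house can fall entirely within the hidden strip or the shadow.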

Visualization of the tilt effect, the shadow effect, and the effect of overhanging trees in the aerial photographs from 2024 (left, cloudy) and 2023 (right, sunny). The red areas show the BAG registration of buildings (the Dutch national register of addresses and buildings), which marks the building footprint at ground level. This clearly shows that part of each building’s surroundings is obscured by the angle at which the aerial photograph was taken. Overhanging trees also obscure a large part, and the photograph on the right clearly shows the shadow effect as well.

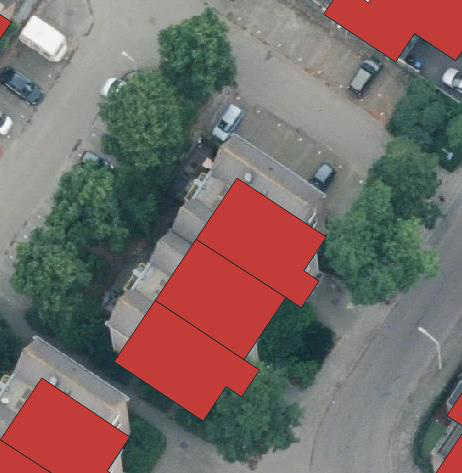

Visualization of the model output compared to the hedges mapped during fieldwork. The blue areas represent the model output; the green outlines represent the hedges mapped in the field.
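The search for a suitable number of epochs, described under "Training the Model", is often automated with early stopping: training halts once the score on held-out data stops improving, and the best epoch seen so far is kept. The sketch below is a generic version of that idea, not necessarily how the epoch counts in this study were chosen; the `best_epoch` function, its `patience` parameter, and the score values are hypothetical.

```python
def best_epoch(test_scores: list[float], patience: int = 3) -> int:
    """Return the 1-based epoch with the highest held-out score, stopping the scan
    once the score has not improved for `patience` consecutive epochs."""
    if not test_scores:
        raise ValueError("need at least one epoch score")
    best_score = float("-inf")
    best = 0
    since_improvement = 0
    for epoch, score in enumerate(test_scores, start=1):
        if score > best_score:
            best_score, best = score, epoch
            since_improvement = 0
        else:
            since_improvement += 1
            if since_improvement >= patience:
                break  # early stop: later epochs are never evaluated
    return best


if __name__ == "__main__":
    # Made-up held-out scores per epoch.
    scores = [0.20, 0.35, 0.41, 0.40, 0.39, 0.38, 0.50]
    print(best_epoch(scores, patience=3))  # → 3
```

With `patience=3`, the example run stops after epoch 6 and returns epoch 3, even though epoch 7 would have scored higher; a larger patience trades extra training time for a lower chance of stopping too early.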

Results

In the neighborhood with few trees, precision and recall were both about 50%; in the tree-dense neighborhood, both were around 25%. This is not yet accurate enough for precise hedge mapping.
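Computing these scores requires a rule for when a detected hedge counts as a match for a mapped one. A common choice, assumed in this sketch rather than taken from the study, is to accept a detection when its intersection over union (IoU) with a still-unmatched reference hedge is at least 0.5. Each hedge is represented as a set of pixel coordinates; the function names and the threshold are illustrative.

```python
# Hypothetical sketch: object-level precision and recall with greedy IoU matching.
# Each hedge is a set of (row, col) pixels; the 0.5 IoU threshold is an assumption.

Pixel = tuple[int, int]


def iou(a: set[Pixel], b: set[Pixel]) -> float:
    """Intersection over union of two pixel sets."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def precision_recall(
    predicted: list[set[Pixel]], reference: list[set[Pixel]], threshold: float = 0.5
) -> tuple[float, float]:
    """Greedily match each predicted hedge to the unmatched reference hedge it overlaps most."""
    matched: set[int] = set()
    for pred in predicted:
        best_idx, best_score = None, 0.0
        for idx, ref in enumerate(reference):
            if idx in matched:
                continue  # each reference hedge can be matched only once
            score = iou(pred, ref)
            if score >= threshold and score > best_score:
                best_idx, best_score = idx, score
        if best_idx is not None:
            matched.add(best_idx)
    true_positives = len(matched)
    # Precision: share of detections that are correct. Recall: share of real hedges found.
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(reference) if reference else 0.0
    return precision, recall


if __name__ == "__main__":
    mapped = [{(0, 0), (0, 1), (0, 2), (0, 3)}, {(5, 0), (5, 1)}]
    detected = [{(0, 0), (0, 1), (0, 2)}, {(9, 9)}, {(5, 0)}]
    print(precision_recall(detected, mapped))  # → (0.6666666666666666, 1.0)
```

Greedy matching depends on the order of the detections; an optimal one-to-one assignment is a possible refinement when many hedges overlap.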

The neural network trained in this study is therefore not yet suitable for use in Aveco de Bondt’s analyses.

However, an important result is that it was possible to build and apply an AI model without an ICT background. This shows the potential for collecting new data and enriching existing datasets using Tygron’s AI tools.

Lessons Learned

This research demonstrates that creating and applying an AI model in Tygron is feasible for non-programmers. A key lesson is to carefully consider the source data and the goal beforehand. In this case, many hedges were simply invisible in the aerial imagery.

Therefore, one should always assess whether the available data sources (aerial or satellite) are suitable for the intended goal.

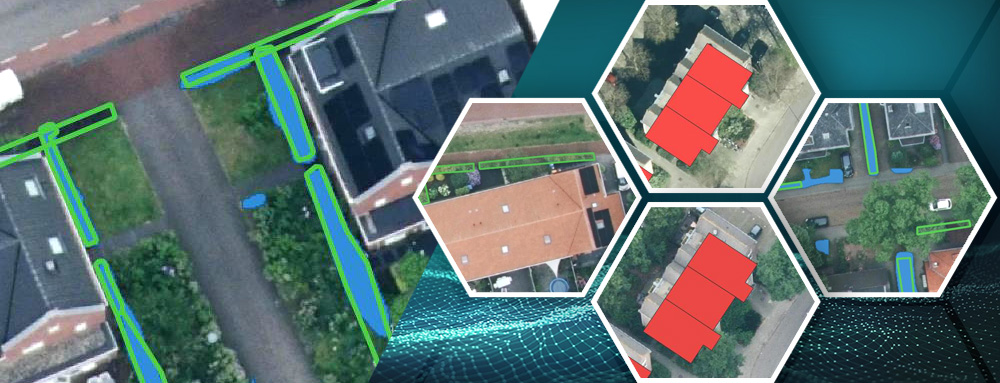

For more clearly visible objects, such as solar panels, better results have already been achieved, and Tygron’s own Foliage AI model also performs quite well.

What Is the Tygron AI Suite?

The Tygron AI Suite is a collection of powerful tools that allow urban planners and GIS specialists to train and deploy neural networks directly within the Tygron Platform.

It enables automatic recognition of objects such as trees, shrubs, or other features in satellite or aerial imagery using machine learning. The computations are performed at high speed using GPU supercomputers directly connected to the platform.

The goal of the AI Suite is to make advanced AI technology accessible to users without deep programming knowledge. Tygron emphasizes open source and accessibility: users can use existing open models or upload their own trained neural networks in ONNX format for seamless integration.

Getting Started: Useful Links

- Introduction and overview: https://www.tygron.com/nl/ai/

- Step-by-step guide and scripts: https://previewsupport.tygron.com/wiki/How_to_train_your_own_AI_model_for_an_Inference_Overlay

- Open-source repository & scripts: https://github.com/Tygron/tygron-ai-suite

- Technical session (PDF): https://www.tygron.com/wp-content/uploads/2024/11/AI-aan-de-slag.pdf

These resources make it easy to start extracting green-space data from imagery on the Tygron platform, or even to train and apply your own AI models.